In early August, an anonymous X account started making big promises about AI. “[Y]ou’re about to taste agi,” it claimed, adding a strawberry emoji. “Q* isn’t a project. It’s a portal. Altman’s strawberry is the key. the singularity isn’t coming. it’s here,” it continued. “Tonight, we evolve.”

If you’re not part of the hyperactive cluster of AI fans, critics, doomers, accelerationists, grifters, and rare insiders who have congregated on X, Discord, and Reddit to speculate about the future of AI, this probably sounds a lot like nonsense. If you are, it might have sounded enticing at the time. Some background: AGI stands for artificial general intelligence, a term used to describe humanlike abilities in AI; the strawberries are a reference to an internal codename for a rumored “reasoning” technology being developed at OpenAI (and a post from OpenAI CEO Sam Altman minutes before that included a photo of strawberries); Q* is either a previous codename for the project or a related project; and the singularity is a theoretical point at which AI or technology more broadly becomes self-improving and uncontrollable. All this coming tonight? Wow.

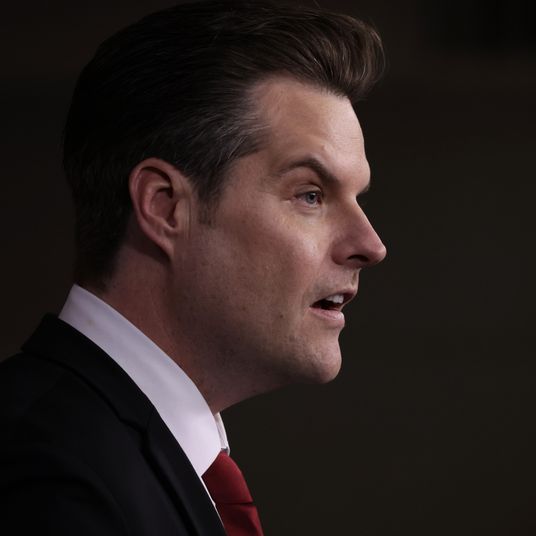

The specificity of the account’s many predictions got the attention of AI influencers, who speculated about who it might be and whether it might be an AI itself — a next-generation OpenAI model whose first human-level job is drumming up publicity for its creator. Its posts broke containment, however, after an unsolicited reply from Sam Altman himself:

For a certain type of highly receptive AI enthusiast, this tipped a plainly absurd scenario — an amateurish, meme-filled anonymous account with the guy from Her as an avatar announcing the arrival of the post-human age — into plausibility. It was time to celebrate. Or was it time to panic?

Neither, it turns out. Strawberry guy didn’t know anything. His “leaked” release dates came and went, and the community started to turn on him. On Reddit, the /r/singularity community banned mention of the account. “I was duped,” wrote SEO and peripheral AI influencer Marie Haynes in a postmortem blog post. Before she knew it was a fake, she said, “strawberry man’s tweets were starting to freak me out.” But, she concluded, “it was all for good reason … We truly are not prepared for what’s coming.”

What was coming was another mysterious account, posting under the name Lily Ashwood, who started appearing in live voice discussions about AI on X Spaces. This time, the account didn’t have to work very hard to get people theorizing. AI enthusiasts who had gathered to talk about what they thought might be the imminent release of GPT-5 — some of whom were starting to suspect they’d been duped — started wondering if this new character might herself be an AI, perhaps using OpenAI’s voice technology and an unreleased model. They zeroed in on her manner of speaking, her fluent answers to a wide range of questions, and her caginess around certain subjects. They attempted to out her, prompting her like a chatbot, and came away uncertain of who, or what, they were talking to.

“I think I just saw a live demo with GPT 5,” wrote one user on Reddit, after joining an X Space with Ashwood. “It’s unbelievable how good she is. It almost fools you into believing it’s a human.” Egged on by Strawberry Guy, others started collecting evidence that Ashwood was AGI in the wild. OpenAI researchers had just co-signed a paper calling for the development of “personhood credentials” to “distinguish who is real online.” Could this be part of that study? Her name had a clue, right there in the middle: I-L-Y-A, as in Ilya Sutskever, the OpenAI co-founder who left the company after clashing with Sam Altman over AI safety. Besides, just listen to the “suspicious noise-gating” and the “unusual spectral frequency patterns.” Superintelligence was right there in front of them, chatting on X:

It wasn’t. Ashwood, who declined a request for comment, described herself as a single mother from Massachusetts and released a video poking fun at the episode (which, of course, some observers took as further evidence she was AI):

But by then, even some prominent AI influencers had gotten caught up in the drama. Pliny the Liberator, an account that gained a large following by cleverly jailbreaking various AI tools — manipulating them to reveal information about how they work, and breaking down guardrails put in place by their creators — was briefly convinced that Ashwood might have been AI. He described the experience as psychologically taxing, and held a debriefing with his followers, some of whom were angry about what he, an anonymous and sometimes trollish account that they’d rapidly come to trust on the mechanics and nascent theology of AI, had led or merely allowed them to believe:

On one hand, again, this is easy to dismiss from the outside: A loose community of people with shared intuitions, fears, and hopes for a vaguely defined technology are working themselves into a frenzy in insular online spaces, manifesting their predictions as they fail to materialize (or take longer than expected). But even the very first chatbots, which would today be instantly recognizable as inert programs, were psychologically disorienting and distressing when they first arrived. More recently, in 2022, a Google engineer was fired after becoming convinced, and saying publicly, that an internal chatbot was showing signs of life. Since then, millions of people have interacted with more sophisticated tools than he had access to, and for at least some of them, it’s shaken something loose. Pliny, who recently received a grant in bitcoin from venture capitalist Marc Andreessen for his work, wondered if OpenAI’s release schedule had been slowed “because sufficiently advanced voice AI has the potential to induce psychosis,” and made a prediction of his own: “fair to say we’ll see the first medically documented case of AI-induced psychosis by December?” His followers were unimpressed. “Nah, i was hospitalized back in 2023 after gpt came out… 6 months straight, 7 days a week, little to no sleep,” wrote one. “HAHAHA I MADE IT IN AUGUST,” wrote another. Another asked: “Have you not been paying attention?”

The fundamental claim here — that AI systems that can talk like people, sound like people, and look like people might be able to fool or manipulate actual people — is fairly uncontroversial. Likewise, it’s reasonable to assume, and worry, that comprehensively anthropomorphized products like ChatGPT might cynically or inadvertently exploit users’ willingness to personify them in harmful ways. As possible triggers for latent mental illness, it would be difficult to come up with something more on the nose than machines that pretend to be humans created by people who talk in riddles about the apocalypse.

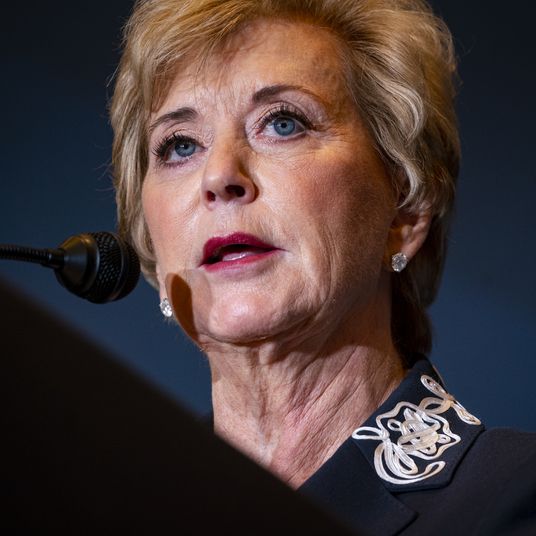

Setting aside the plausibility or inevitability of human-level autonomous intelligence, there are plenty of narrow contexts in which AI is already used in similarly misleading ways, to defraud people by cloning family members’ voices, or by simply posting automated misinformation on social media. Some OpenAI employees, perhaps sensing that things are getting a little bit out of control, have posted calls to, basically, calm down:

Whether companies like OpenAI are on the cusp of releasing technology that matches their oracular messaging is another matter entirely. The company’s current approach, which involves broad claims that human-level intelligence is imminent, cryptic posts from employees who have become micro-celebrities among AI enthusiasts, and secrecy about its actual product roadmap, has been effective in building hype and anticipation in ways that materially benefit OpenAI. *Much bigger models are coming, and they’ll blow your mind* is exactly what increasingly skeptical investors need to hear in 2024, especially now that GPT-4 is approaching two years old.

For the online community of people unsettled, excited, and obsessed by the last few years of AI development, though, this growing space between big AI models is creating a speculative void, and it’s filling with paranoia, fear, and a little bit of fraud. They were promised robots that could trick us into thinking they’re human, and that the world would never be the same. In the meantime, they’ve settled on the next best thing: tricking each other.