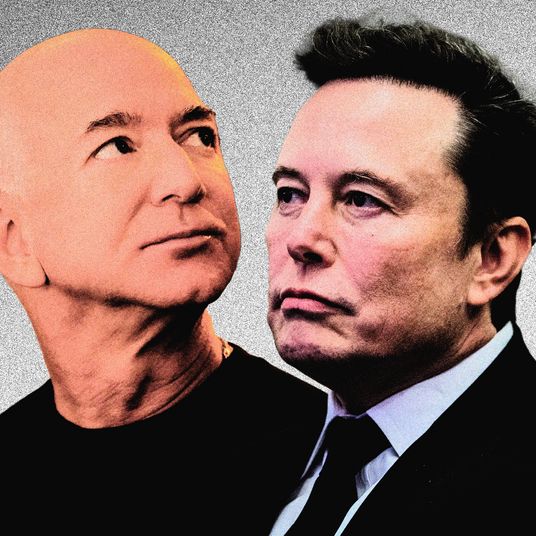

It’s an exciting time at the cutting edge of AI research. Massive companies are spending tens of billions of dollars to build, train, and deploy new AI models with which they hope to automate a wide range of tasks currently relegated to thinking humans. Researchers are considering questions about whether, and how, computers can reason or what it might mean for software to be meaningfully intelligent. It’s interesting and sometimes bracing stuff, and you really get the feeling, talking to people in the industry, that some of them feel as though they’re not just building the future but manifesting destiny, which is the sort of belief that might lead one to start telling eager customers to show “gratitude”:

While the future of AI is the subject of vigorous, stimulating, and productive debate, the rest of us on the internet, a few miles downstream from research labs, are confronting AI-related questions of our own, about the present and recent past. Questions like *Is that image AI, or is it just sort of ugly? Is my student’s essay AI-generated, or is it just repetitive, low effort, and full of clichés? Is this menu AI, or is the food just kind of disgusting? Is this photo AI, or is her face just that symmetrical? Is this book AI, or was it just written by a cheap ghostwriter? (Or both?) Is that post from a stranger on social media AI, or are people really just that dull? Is this email AI, or has my co-worker started taking lots of Adderall?*

In the two years since the launch of ChatGPT, the general public’s dazzling introduction to generative AI, the tech industry has managed to keep the discourse in the future tense: New capabilities are imminent; new models are coming; today’s AI tools are the worst you’ll ever use. This is going to change everything. Maybe so! In the meantime, these in-progress, worst-ever generative-AI tools, many rushed into popular products by the big AI firms themselves, are very much being used, not just by people who want an early taste of new technology but by millions of people for the thousands of reasons people might want to quickly and cheaply generate text, images, or video clips.

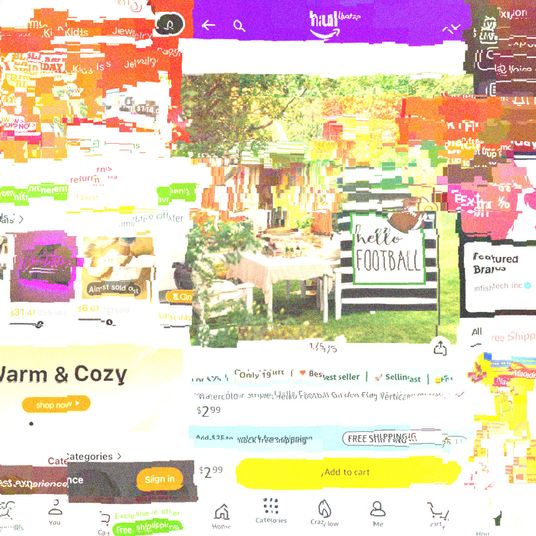

The result is mounting dissonance. Maybe your first experience with AI is an encounter with a chatbot that uncorks decades of sci-fi anticipation and fear around machine intelligence, prompting runaway sequences of thought (maybe optimistic, maybe apocalyptic) about an utterly changed world. But then maybe your next experiences with AI are a scammy Instagram weight-loss ad, a questionable AI-generated Google answer, a maybe-AI low-quality search result after the Google answer, and a customer-service chatbot that seems as if it might be human for the first few messages of your exchange. Maybe it’s a post from a presidential candidate accusing immigrants of eating pets, hastily illustrated with synthetic kittens. Or maybe you’re just going to pick up a gallon of milk at the store and you see this:

Exciting, fast-changing tools with enormous theoretical potential are being used, in the real world, right now, to produce near-infinite quantities of bad-to-not-very-good stuff. In part, this reflects a disconnect between forward-looking narratives and hype on one side and lagging actual capabilities on the other; it also illustrates a gap between how people imagine they might use knowledge-work automation and how it actually gets used. More than either of these things, though, it’s an example of the difference between the impressive and empowering feeling of using new AI tools and the far more common experience of having AI tools used on you: the difference between generating previously impossible quantities of passable emails, documents, and imagery and being on the receiving end of all that new production.

I’m not really talking about AI slop here. Garbled and obviously generated AI garbage is abundant but by definition easy to spot and dismiss; as widely available AI tools have improved, the generation of egregious, attention-grabbing mutant content in 2024 is often a deliberate engagement strategy rather than the result of flawed tools and low effort. Nor am I talking about a scenario like the ones predicted with the rise of deepfakes and the subsequent availability of AI-powered image and video generators, in which the general public is defenseless against synthetic media, never sure if what everyone’s seeing is real or fake, manipulable by machines that can manufacture new realities, destroying the original in the process. What I’m talking about is something in the middle: the association of AI with content that nobody particularly wants to create or consume but that plays a significant (if ambient) role in daily life online.

This is, in one sense, a success story for AI. It’s proof that current-generation tools can be used for the unglamorous but economically valuable task of completing busywork and filling voids. It’s evidence that AI capabilities are moving up the value chain from spam and slop to, well, the stuff right above spam and slop. The flip side of this success is that AI is becoming synonymous with the types of things it’s most visibly used to create: uninspired, unwanted, low-effort content made with either indifference toward or contempt for its ultimate audience.

That AI tools can produce marketing emails or filler illustrations that are hard to identify with certainty as AI-generated is, again, a fairly remarkable development in technological terms. What’s distinctly unremarkable is the content itself. Apple’s recent iPhone announcement, which also marked the release of its new AI-centric iOS, focused on the company’s state-of-the-art AI models and powerful new tools for users, including image generation. The first demo of its image-generation tools did indeed represent a rare accomplishment: Apple has devised a novel, compute-intense way to generate generic-looking puppy illustrations that aren’t quite cute:

Earlier this year, researchers charted a drift in the use of the word bot on social media from 2007 to the present day. For years, it was most often a literal accusation of bothood, a suggestion that the subject was a machine. More recently, however, the word is intended as a general insult: to suggest that another user is botlike, unable to think for themselves and not worth engaging with. AI is undergoing a similar transformation: To describe a piece of media as AI-generated is just as often a claim about its aesthetic qualities as it is about its actual mode of production. To say that music is like AI is to say that it’s unoriginal; to suggest that a movie seems AI-generated is to say that it’s pandering and derivative.

Something might “look like AI” because it’s a little bit unbelievable, a little bit generic, or more generally off or bad. It’s a critique that aligns fairly well with the way generative AI works and gets trained: by recognizing and then replicating patterns in enormous amounts of data that already exist. Whether this alignment holds — whether the tendency of generative AI to reproduce cliché and banality remains as perceptible as it is today — is as relevant to the future of the technology as any benchmark.

In the tech world, for now, AI’s brand could not be stronger: It’s associated with opportunity, potential, growth, and excitement. For everyone else, it’s becoming interchangeable with things that sort of suck.