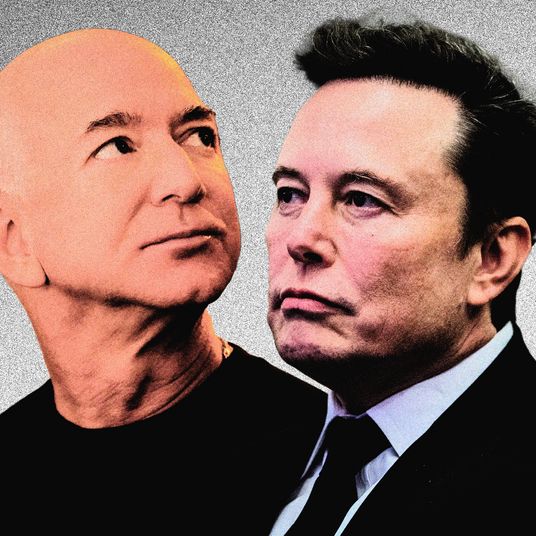

In pure organizational terms, OpenAI is a weird entity. It started as a nonprofit, raising more than $100 million to spend on foundational AI research, before morphing into a “capped profit” corporation governed by a nonprofit and “legally bound” to pursue the nonprofit’s mission. In 2015, that mission was “to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return.” In 2024, OpenAI says its “mission is to ensure that artificial general intelligence (AGI) — by which we mean highly autonomous systems that outperform humans at most economically valuable work — benefits all of humanity.”

This was, in hindsight, a pretty good deal for OpenAI, which has burned through billions of dollars building, training, and operating AI models. First, it got to raise a lot of money without normal pressure to pay it back. Then, as a for-profit firm wrapped in a non-profit, it raised huge amounts of money from Microsoft without the risk of control by or absorption into the nearly 50-year-old company. In the process of raising its next round of funding, which values the startup at more than $150 billion, the company has told investors it will transition to a for-profit structure within two years, or they get their money back.

If you take Sam Altman at his word, this process wasn’t inevitable, but it turned out to be necessary: As OpenAI’s research progressed, its leadership realized that its costs would be higher than non-profit funding could possibly support, so it turned to private sector giants. If, like many of OpenAI’s co-founders, early researchers, former board members, and high-ranking executives, you’re not 100 percent convinced of Sam Altman’s candor, you might look back at this sequence of events as opportunistic or strategic. If you’re in charge of Microsoft, it would be irresponsible not to at least entertain the possibility that you’re being taken for a ride. According to the Wall Street Journal:

OpenAI and Microsoft are facing off in a high-stakes negotiation over an unprecedented question: How should a nearly $14 billion investment in a nonprofit translate to equity in a for-profit company?

Both companies have reportedly hired investment banks to help manage the process, suggesting that this path wasn’t fully sketched out in previous agreements. (Oops!) Before the funding round, the firms’ relationship had reportedly become strained. “Over the last year, OpenAI has been trying to renegotiate the deal to help it secure more computing power and reduce crushing expenses while Microsoft executives have grown concerned that their A.I. work is too dependent on OpenAI,” according to the New York Times. “Mr. Nadella has said privately that Mr. Altman’s firing in November shocked and concerned him, according to five people with knowledge of his comments.”

Microsoft is joining in the latest investment round but not leading it; meanwhile, it’s hired staff from OpenAI competitors, hedging its bet on the company and preparing for a world in which it no longer has preferential access to its technology. OpenAI, in addition to broadening its funding and compute sources, is pushing to commercialize its technology on its own, separately from Microsoft. This is the sort of situation companies prepare for, of course: Both sides will have attempted to anticipate, in writing, some of the risks of this unusual partnership. Once again, though, OpenAI might think it has a chance to quite literally alter the terms of its arrangement. From the Times:

The contract contains a clause that says that if OpenAI builds artificial general intelligence, or A.G.I. — roughly speaking, a machine that matches the power of the human brain — Microsoft loses access to OpenAI’s technologies. The clause was meant to ensure that a company like Microsoft did not misuse this machine of the future, but today, OpenAI executives see it as a path to a better contract, according to a person familiar with the company’s negotiations. Under the terms of the contract, the OpenAI board could decide when A.G.I. has arrived.

One problem with the conversation around AGI is that people disagree on what it means, exactly, for a machine to “match” the human brain, and how to assess such abilities in the first place. This is the subject of lively, good-faith debate in AI research and beyond. Another problem is that some of the people who talk about it most, or at least most visibly, are motivated by other factors: dominating their competitors; attracting investment; supporting a larger narrative about the inevitability of AI and their own success within it.

That Microsoft might have agreed to such an AGI loophole could suggest that the company takes the prospect a bit less seriously than its executives tend to indicate — that it sees human-level intelligence emerging from LLMs as implausible and such a concession as low-risk. Alternatively, it could indicate that the company believed in the durability of OpenAI’s non-profit arrangement and that its board would responsibly or at least predictably assess the firm’s technology well after Microsoft had made its money back with near AGI; now, of course, the board has been purged and replaced with people loyal to Sam Altman.

This raises the possibility that Microsoft simply misunderstood or underestimated what kind of partner it had in OpenAI. Popular theories about AI risk posit that a sufficiently intelligent machine could eventually find that its priorities don’t align with those of the people who created it and might use its human-like, self-improving intelligence to compete with, deceive, or generally cause harm to actual people (depending on who’s talking, such theories can sound like reasoned risk assessment or something closer to projection). For now, though, Microsoft seems to be confronting a smaller, more familiar sort of alignment problem, in the form of human Sam Altman, who will emerge from OpenAI’s planned restructuring with even greater control over the organization. Having given OpenAI the resources to grow, but also the space to arbitrarily and advantageously redefine itself, Microsoft risks turning what was, on paper, in theory, a good investment, into a huge mess. Not to be a doomer about it, but: Maybe they should have known.
